This post covers using Argo Workflows to build container images with Kaniko and push them to different registries. It goes over sending the image to multiple ECR repositories in different AWS partitions, but ECR could easily be swapped for another registry (Docker, GitHub, etc.).

Using AWS EKS with an IAM OIDC provider set up in each of two accounts allows different pods/service accounts to assume IAM roles in each account.

TL;DR — Code can be found here on GitHub

I'm writing this because I recently had to implement this solution at work to store ECR images across multiple AWS partitions. ECR has no built-in way to replicate images across partitions (commercial/GovCloud/China/Europe?), and IAM/resource policies can't grant pulls across them either. I decided the best option was to push the image to each partition during the CI process instead of running a separate pull/push job.

Since my 2023 article on Tekton, I've switched to Argo Workflows. It has a better UI, which makes it easier for developers who aren't Kubernetes-savvy to use, and it lets you manage CRDs and workflows with RBAC. It also integrates well with ArgoCD (Dex), so we can have SSO (no more basic auth).

Enough exposition

Kaniko

Kaniko is a tool for building container images inside Kubernetes without the Docker daemon (only a container runtime is needed).

You only need a Dockerfile to build the image and, optionally, a destination to push it to (pass --no-push to skip pushing).

In my case, I'll build an app, push it to the awesome temporary registry ttl.sh, and save the build as a tar file.

docker run --rm -v $HOME/repo/project/app:/workspace \
  gcr.io/kaniko-project/executor:latest \
  --destination "ttl.sh/infrasec-kaniko:1h" \
  --tar-path image.tar \
  --context dir:///workspace/

The next logical step is to convert this to a pipeline (and gitops so every bugfix needs a pull request /s).

This Argo WorkflowTemplate assumes that a persistent volume claim containing the source code already exists and is named <source_workflow>-source-code. A previous step in the pipeline can create and populate it and then call this workflow.

apiVersion: argoproj.io/v1alpha1
kind: WorkflowTemplate
metadata:
  name: docker-build
  namespace: argo
spec:
  arguments:
    parameters:
      - name: build_path
      - name: destination_image
      - name: image_tag
      - name: source_path
        value: /source
      - name: source_workflow
  entrypoint: kaniko
  serviceAccountName: argo-docker-build
  templates:
    - name: kaniko
      container:
        args:
          - '--context={{ inputs.parameters.source_path }}/{{ inputs.parameters.build_path }}'
          - '--destination={{ inputs.parameters.destination_image }}:{{ inputs.parameters.image_tag }}'
          - '--tar-path={{ inputs.parameters.source_path }}/{{ inputs.parameters.build_path }}/image.tar'
        image: gcr.io/kaniko-project/executor:v1.23.2
        volumeMounts:
          - mountPath: '{{ inputs.parameters.source_path }}'
            name: source-code
      inputs:
        parameters:
          - name: build_path
          - name: destination_image
          - name: image_tag
          - name: source_path
  volumes:
    - name: source-code
      persistentVolumeClaim:
        claimName: '{{ workflow.parameters.source_workflow }}-source-code'

The image.tar file is saved in the build context directory (the same location as the Dockerfile), and we'll use it in a later step.
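Here is a small Go sketch that shows how the template's parameters turn into Kaniko flags. It resolves them with the example values from the parameter table at the end of this post. kanikoArgs is a hypothetical helper for illustration and is not part of the workflow. The template's plain concatenation produces /source//project/app, which is still a valid path. The sketch uses path.Join to normalize it.

```go
package main

import (
	"fmt"
	"path"
)

// kanikoArgs is a hypothetical helper that mirrors how the docker-build
// WorkflowTemplate composes the Kaniko executor arguments from its inputs.
func kanikoArgs(sourcePath, buildPath, destinationImage, imageTag string) []string {
	// path.Join cleans the result, so "/source" + "/project/app" becomes
	// "/source/project/app" rather than "/source//project/app".
	buildContext := path.Join(sourcePath, buildPath)
	return []string{
		"--context=" + buildContext,
		"--destination=" + destinationImage + ":" + imageTag,
		// The tarball lands inside the build context, next to the Dockerfile.
		"--tar-path=" + path.Join(buildContext, "image.tar"),
	}
}

func main() {
	args := kanikoArgs("/source", "/project/app",
		"0123456789.dkr.ecr.us-east-1.amazonaws.com/infrasec-project", "latest")
	for _, arg := range args {
		fmt.Println(arg)
	}
}
```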

ECR IAM

For Kaniko to authenticate to the destination registry (ECR, in my case), we need to grant the service account (argo-docker-build) IAM permissions. Follow the steps in Assign IAM roles to Kubernetes service accounts to set up the role.

Below is an example IAM policy that grants the ECR permissions needed to push images.

{
  "Statement": [
    {
      "Action": [
        "ecr:BatchCheckLayerAvailability",
        "ecr:BatchGetImage",
        "ecr:CompleteLayerUpload",
        "ecr:GetDownloadUrlForLayer",
        "ecr:InitiateLayerUpload",
        "ecr:PutImage",
        "ecr:UploadLayerPart"
      ],
      "Effect": "Allow",
      "Resource": "arn:aws:ecr:us-east-1:0123456789:repository/*",
      "Sid": "PublishImage"
    },
    {
      "Action": "ecr:GetAuthorizationToken",
      "Effect": "Allow",
      "Resource": "*",
      "Sid": "GetAuthToken"
    }
  ],
  "Version": "2012-10-17"
}
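The policy above uses the commercial aws partition in its Resource ARN. When you reuse it for the second account in another partition, the ARN prefix changes: aws-us-gov for GovCloud and aws-cn for China. The Go sketch below derives that prefix from the region name. It is a minimal illustration that assumes only those three partitions. partitionForRegion and ecrRepositoryARN are hypothetical helpers.

```go
package main

import (
	"fmt"
	"strings"
)

// partitionForRegion maps a region name to its AWS partition.
// Assumption: only the commercial, GovCloud, and China partitions are handled.
func partitionForRegion(region string) string {
	switch {
	case strings.HasPrefix(region, "us-gov-"):
		return "aws-us-gov"
	case strings.HasPrefix(region, "cn-"):
		return "aws-cn"
	default:
		return "aws"
	}
}

// ecrRepositoryARN builds the Resource value for the PublishImage statement.
func ecrRepositoryARN(region, accountID, repository string) string {
	return fmt.Sprintf("arn:%s:ecr:%s:%s:repository/%s",
		partitionForRegion(region), region, accountID, repository)
}

func main() {
	fmt.Println(ecrRepositoryARN("us-east-1", "0123456789", "*"))
	fmt.Println(ecrRepositoryARN("us-gov-west-1", "9876543210", "*"))
}
```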

Crane

Now we need a way to push the image tar to another container registry. The best tool I found was Crane, thanks to this helpful Stack Overflow comment.

We can use the Crane CLI to push our image to a registry.

crane push image.tar ttl.sh/infrasec-crane:1h

To push to a registry that requires authentication (AWS ECR, in my case), we must first log in.

aws ecr get-login-password --region us-east-1 | crane auth login -u AWS --password-stdin 0123456789.dkr.ecr.us-east-1.amazonaws.com

crane push image.tar 0123456789.dkr.ecr.us-east-1.amazonaws.com/infrasec-crane:latest

Custom Wrapper

To run Crane as a step alongside Kaniko, we need it in a container. Crane is the CLI for the Go library go-containerregistry, so we can write a small custom wrapper around the library and containerize it.

The code is available here on GitHub in my website reference repository.

The code performs the following actions:

- Authenticates with AWS ECR and gets the login password

- Loads the tarball file

- Pushes the image to the registry

The program expects three environment variables:

| Name | Description | Example Value |
|---|---|---|
| AWS_ECR_ENDPOINT | ECR registry endpoint to authenticate against | 0123456789.dkr.ecr.us-east-1.amazonaws.com |
| IMAGE_TAR | Path to the image tarball | image.tar |
| IMAGE_URI | Full image URI (registry, repository, tag) | 0123456789.dkr.ecr.us-east-1.amazonaws.com/infrasec-crane:latest |

Argo Workflows

Now we can put together Kaniko and Crane in an Argo Workflows Template!

We need another service account with an AWS IAM role for the Crane pod (argo-crane-push). Follow the same instructions, changing only the account and/or partition. You will also need to create an IAM OIDC provider for the cluster in the second destination AWS account.

Next, we will need to create a workflow template for the Crane task.

apiVersion: argoproj.io/v1alpha1
kind: WorkflowTemplate
metadata:
  name: crane
  namespace: argo
spec:
  arguments:
    parameters:
      - name: build_path
      - name: destination_image
      - name: ecr_repository
      - name: image_tag
      - name: source_path
        value: /source
      - name: source_workflow
  entrypoint: crane
  serviceAccountName: argo-crane-push
  templates:
    - name: crane
      container:
        image: 0123456789.dkr.ecr.us-east-1.amazonaws.com/infrasec-crane:latest
        env:
          - name: AWS_ECR_ENDPOINT
            value: '{{ inputs.parameters.ecr_repository }}'
          - name: IMAGE_TAR
            value: '{{ inputs.parameters.source_path }}/{{ inputs.parameters.build_path }}/image.tar'
          # destination_image already includes the registry, so it is not prefixed again
          - name: IMAGE_URI
            value: '{{ inputs.parameters.destination_image }}:{{ inputs.parameters.image_tag }}'
        volumeMounts:
          - mountPath: '{{ inputs.parameters.source_path }}'
            name: source-code
      inputs:
        parameters:
          - name: build_path
          - name: destination_image
          - name: ecr_repository
          - name: image_tag
          - name: source_path
      podSpecPatch: '{"serviceAccountName": "argo-crane-push"}'
  volumes:
    - name: source-code
      persistentVolumeClaim:
        claimName: '{{ workflow.parameters.source_workflow }}-source-code'

Note: If your destination is in another AWS region, you may need to set the AWS_REGION environment variable on the Crane container.
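Instead of hard-coding AWS_REGION, you could read the region out of the ECR endpoint. Standard ECR registry hostnames follow <account>.dkr.ecr.<region>.amazonaws.com, with a .cn suffix in China. The Go sketch below is a hypothetical helper for that. It rejects other hostname shapes, such as FIPS or dual-stack endpoints.

```go
package main

import (
	"fmt"
	"strings"
)

// regionFromECREndpoint extracts the region from a registry hostname of the form
// <account>.dkr.ecr.<region>.amazonaws.com (or .amazonaws.com.cn in China).
func regionFromECREndpoint(endpoint string) (string, error) {
	labels := strings.Split(endpoint, ".")
	if len(labels) >= 6 && labels[1] == "dkr" && labels[2] == "ecr" {
		switch strings.Join(labels[4:], ".") {
		case "amazonaws.com", "amazonaws.com.cn":
			return labels[3], nil
		}
	}
	return "", fmt.Errorf("unrecognized ECR endpoint %q", endpoint)
}

func main() {
	region, err := regionFromECREndpoint("9876543210.dkr.ecr.us-west-2.amazonaws.com")
	if err != nil {
		panic(err)
	}
	// The value could then be passed to the Crane container as AWS_REGION.
	fmt.Println(region)
}
```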

If the second destination is in a different AWS partition, log archiving (archiveLogs) must be disabled globally in Argo Workflows and then enabled per project.

Argo Workflows runs every pod with the calling workflow's service account, even when a referenced template specifies a different one. For example, if the calling workflow uses the argo-docker-build service account, the Crane pod will also run as argo-docker-build, even though the crane WorkflowTemplate sets serviceAccountName: argo-crane-push.

The workaround is to override the service account in the Crane template's pod spec with podSpecPatch: '{"serviceAccountName": "argo-crane-push"}' (ref)

Now, to combine it all into one workflow:

apiVersion: argoproj.io/v1alpha1
kind: WorkflowTemplate
metadata:
  name: build-docker
  namespace: argo
spec:
  arguments:
    parameters:
      - name: build_path
      - name: destination_image_1
      - name: destination_image_2
      - name: ecr_repository_2
      - name: image_tag
      - name: source_workflow
  entrypoint: docker
  onExit: exit-handler
  serviceAccountName: argo-docker-build
  templates:
    - name: docker
      dag:
        tasks:
          - name: kaniko
            templateRef:
              name: docker-build
              template: kaniko
            arguments:
              parameters:
                - name: build_path
                  value: "{{ workflow.parameters.build_path }}"
                - name: destination_image
                  value: "{{ workflow.parameters.destination_image_1 }}"
                - name: image_tag
                  value: "{{ workflow.parameters.image_tag }}"
                - name: source_path
                  value: "/source"
                - name: source_workflow
                  value: "{{ workflow.parameters.source_workflow }}"
          - name: crane
            depends: kaniko.Succeeded
            templateRef:
              name: crane
              template: crane
            arguments:
              parameters:
                - name: build_path
                  value: "{{ workflow.parameters.build_path }}"
                - name: destination_image
                  value: "{{ workflow.parameters.destination_image_2 }}"
                - name: ecr_repository
                  value: "{{ workflow.parameters.ecr_repository_2 }}"
                - name: image_tag
                  value: "{{ workflow.parameters.image_tag }}"
                - name: source_path
                  value: "/source"
          - name: notification
            depends: crane.Succeeded || crane.Failed
            templateRef:
              name: slack-notification
              template: post
            arguments:
              parameters:
                - name: image_tag
                  value: "{{ workflow.parameters.image_tag }}"
                - name: webhook_secret
                  value: build-notification
    - name: exit-handler
      steps:
        - - name: notify-on-failure
            when: "{{ workflow.status }} != Succeeded"
            templateRef:
              name: build-notification
              template: post
            arguments:
              parameters:
                - name: workflowName
                  value: "{{ workflow.name }}"
                - name: webhook_secret
                  value: build-notification
  volumes:
    - name: source-code
      persistentVolumeClaim:
        claimName: "{{ workflow.parameters.source_workflow }}-source-code"

Note: This workflow is designed to be used as a sub-workflow. The parent workflow creates it as its final step, similar to this example.

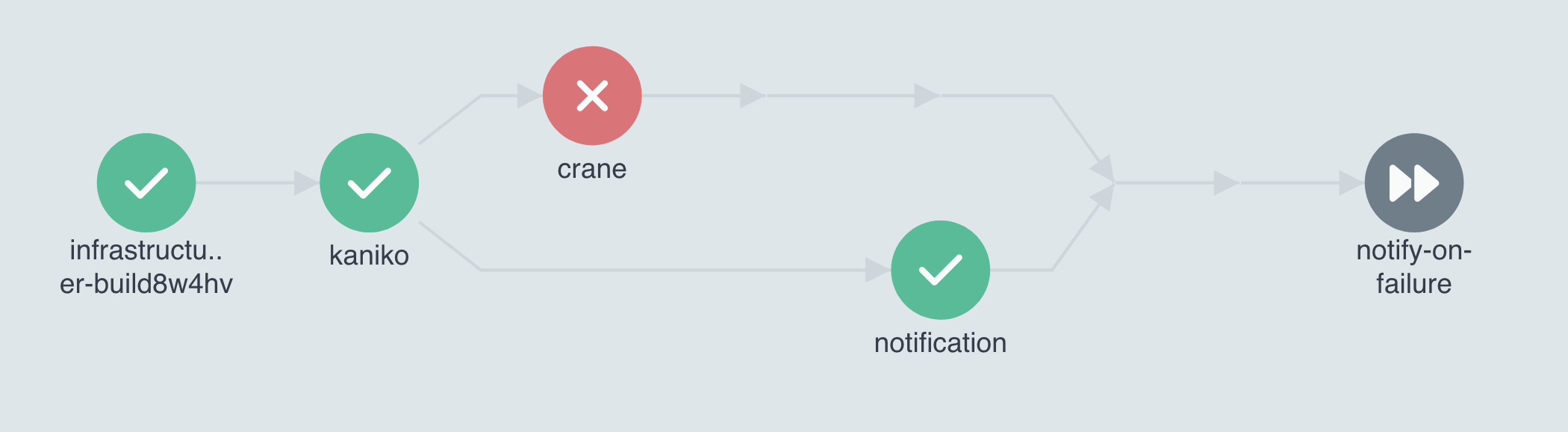

- There is an exit handler that sends a notification when the workflow does not succeed. It is only here to show how an exit handler works alongside the DAG.

- The workflow uses a DAG (directed acyclic graph), which lets each task run conditionally on the results of the tasks it depends on.

- It first runs the Kaniko task, which builds the image, pushes it to the first container registry, and saves it as a tar file.

- If the Kaniko task succeeds, it runs the Crane task, which pushes the tar to the second AWS ECR repository.

- Whether the Crane task succeeds or fails, it sends a notification that the image was built. (ref)

- This is intentional, so the pipeline fails open in case the second repository does not exist.

Now all we need to do is invoke the workflow with the following parameters.

| Parameter | Description | Example Value |
|---|---|---|
| build_path | Path to the build context inside the PVC | /project/app |
| destination_image_1 | First destination ECR repository (full URI, without tag) | 0123456789.dkr.ecr.us-east-1.amazonaws.com/infrasec-project |
| destination_image_2 | Second destination ECR repository (full URI, without tag) | 9876543210.dkr.ecr.us-west-2.amazonaws.com/infrasec-project |
| ecr_repository_2 | Registry endpoint of the second ECR repository | 9876543210.dkr.ecr.us-west-2.amazonaws.com |
| image_tag | Container image tag | latest |
| source_workflow | Name of the source workflow, used to find the PVC | git-clone |

Note

If something does not work, please reach out or open a GitHub issue.

Disclaimer: I have not fully tested the workflows, but they are based on actual workflows I run in production.